There is great value in examining software processes from an execution perspective. My latest article provides an understanding of the underlying execution principles that make Agile methods effective. Read the full article on InfoQ.com.

Food for Thought on Software Engineering & Beyond

Thursday, December 13, 2012

Friday, June 29, 2012

Why prototyping is your best friend

There is no doubt that the single most challenging problem in software engineering is getting the right requirements in order to build the right product.

The problem of requirements occurs for a number of reasons. Very often, the customer does not know exactly what they want. "I want a system that will make our collaboration better," they might say. For any software engineer, this can mean a hundred different things. And even when the customer has a reasonably clear idea of what they want, the thinking generally happens in such an abstract way that communicating the idea becomes rather difficult. You see the customer talking, using their hands and drawing circles in the air to explain how they imagine things, and you know that they have something specific in mind, but they're having a hard time explaining it. And even in the rare cases when the customer can explain what they want really well, the problem of terminology comes into the picture immediately. For one, customers usually (and rightfully) use their own domain language to explain things: "This feature should allow the user to inject the data and put it in clusters." And second, the communication between developers and customers regarding design and implementation has no common ground. You listen to developers talking about a control panel, and a navigation window, and a workspace. The customer nods their head in agreement. But experience has taught us that this nodding usually means one thing: "I get what you are trying to say in principle, but I'm not sure if we're talking about the same thing."

Luckily enough, prototyping can conquer all of these problems in a very elegant and smooth way.

Prototyping involves building a quick-and-dirty mock-up of how we envision the system will work or look in the near future (months in the agile world). Especially nowadays, as the focus moves more and more towards building GUI-intensive systems, you might want to consider prototyping the UI without worrying much about the underlying functionality.

How do you start?

- Sit down with the customer and the key developers involved, and try to come up with a few use cases (scenarios) of the system under development. Make sure this happens strictly in the language of the end user, not the language of software developers. You don't need to exhaust all possible scenarios - just the main ones for now.

- Give your team the chance to think about it and ask questions. Then, in the same meeting, assign the task of coming up with the first UI prototype to someone (or a pair). This is an essential step, because you need a starting point before you convene again. Otherwise, you will drown in an endless discussion before you can even draw anything on the board.

Also, set a firm and short deadline for this to happen. For example, I prefer the next day as a deadline. This has many advantages. First, prototyping works best when the discussion about the use cases is still fresh in your mind. More importantly, you don't want the person with this task to spend a lot of time trying to perfect the prototype or make it look beautiful, because that would be counter-productive in this phase. Also, the product of one day's worth of work is going to look dirty, and this helps focus everyone's attention on scrutinizing the content rather than the look (in this phase), and it helps convey the message that this is a very rough prototype that is in severe need of everybody's input. Finally, we have learnt from our dark history with requirement documentation that the more time and effort we spend on an artifact, the more we will resist changing it later on. Therefore, you don't want the prototypers to get emotionally attached to their initial design.

- Convene again and ask the person tasked with prototyping to draw their initial design on a whiteboard, and explain it. A whiteboard is all you need. No computer designs should be involved - again because psychologically we are more inclined to resist changing a computer-produced design than a hand-drawn sketch on the board (a.k.a. a low-fidelity prototype). Look at these two prototypes for example:

- Have a few rounds of discussions on the presented prototype, and change it as needed. Depending on how this goes, you may need to spend two to three days on this activity. It is a good idea to break the meeting when you reach a dead end in your discussion or when people are too exhausted. Come the next day with fresh ideas and open the discussions again. Once your prototype starts to change only in the fine details, stop the discussions and move on to the next step.

- The team should spend the next few days on a quick spike to transfer this prototype from the whiteboard to an actual UI everybody can see on a computer screen (i.e. a high-fidelity prototype). Use the intended language/platform to prepare this prototype. For example, if this is a web-based application, you might want to use HTML, CSS and JavaScript. If it is a WPF application, use C#, and so on.

- Only UI elements and the main interactions should be included in the prototype. The underlying functionality (communication with the server, DB connections, etc.) should be ignored for now. To replace the missing functionality, use mock-up objects and files to fill the UI with close-to-real content. Also, the aesthetic details should not be a concern at this point. Remember that this is still a prototype and should stay as such until you decide otherwise.
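To make the idea of mock-up objects concrete, here is a minimal sketch in Python (chosen only for brevity; your prototype would use its own platform). All names in it (`Document`, `DocumentSource`, `MockDocumentSource`, `render_recent_list`) and the sample data are hypothetical and invented for illustration. The point is that the UI code asks an interface for data, and during prototyping a mock with canned, close-to-real content stands in for the server or database.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Document:
    """A piece of content the prototype UI displays (hypothetical)."""
    title: str
    owner: str


class DocumentSource(Protocol):
    """Anything the UI can ask for documents (hypothetical interface)."""

    def recent_documents(self) -> List[Document]:
        ...


class MockDocumentSource:
    """Stands in for the real server/DB while prototyping.

    Returns canned, close-to-real content; no network or DB access.
    """

    def recent_documents(self) -> List[Document]:
        return [
            Document("Q3 budget draft", "Dana"),
            Document("Onboarding checklist", "Sam"),
        ]


def render_recent_list(source: DocumentSource) -> List[str]:
    """Build the text rows a 'recent documents' panel would show."""
    return [
        f"{doc.title} (owner: {doc.owner})"
        for doc in source.recent_documents()
    ]


# The UI code only depends on DocumentSource, so a real implementation
# can replace the mock later without touching the rendering code.
print("\n".join(render_recent_list(MockDocumentSource())))
```

Because the rendering code never knows whether it is talking to a mock or to a real back end, the prototype can be discussed with the customer long before any server work starts.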

Did you get your prototype ready? Let me tell you what you have just achieved.

First of all, you created a common vision for the whole team - something that you would otherwise struggle to do. The prototype you created will serve as a point of reference to remind everyone on the team of what it is we want to accomplish. And best of all, this reference prototype will facilitate future meetings and discussions. You will always have it handy wherever you go. Your customer will come to you and talk about this specific window and that specific button. What you did there is share your technical terminology with your customer, and at the same time, you will find yourself starting to talk your customer's language because they were involved in making this prototype in the first place. (Read Eric Evans' book on Domain-Driven Design for more on the importance of nomenclature in software development.)

From a project management perspective, planning and estimation become a much easier job. Given a prototype that - to a reasonable degree - reflects what we aim to achieve, we can now break down the work into deliverables, plan releases and iterations, assign resources, assess risk, and set expectations.

Finally, your prototype should always, in my opinion, present itself as a prototype and nothing more than a prototype. Its aesthetics, color schemes, and layout of UI components should not give the impression that it is a final product. Otherwise, you will lose some of the key advantages of building a prototype, such as keeping the discussion focused on content. Later on, as your customer becomes less concerned with the general vision, you can always fine-tune things to start discussing the design itself.

Your prototype is indeed your best friend, but no matter where you guys hang out, never lose your friend at a beer garden!

Tuesday, May 22, 2012

Your five-minute guide to Agile Software Development

What is Agile Software Development (ASD)?

ASD is a set of principles and practices that allow you to build reliable software systems cheaper and faster than you would using more traditional approaches. The main characteristics of ASD include: incremental and iterative development, heavy customer involvement, and minimal overhead. At the end of the day, using agile methods significantly enhances your chances of building the right product for your customer.

How does it work?

A short cycle of "plan->build->get feedback" repeats over the course of the project until the customer is satisfied that they have received a product they like and deem useful and reliable.

Is the notion of incremental/iterative development new?

No. Incremental/iterative development has been around for a long time (~ 1950s).

Then, how is ASD different?

ASD contributed significantly to promoting iterative development and making it work by incorporating a number of principles and practices that emphasize discipline, efficiency and business value. Anecdotal evidence suggests a considerable increase in the project success rate compared to older approaches. Before ASD, iterative development had major issues such as:

- heavy up-front work (e.g. planning, requirement elicitation, design),

- lengthy iterations (e.g. up to years as in the spiral model),

- considerable overhead (e.g. documentation, processes, modelling).

In ASD, by contrast:

- Up-front work is minimal, because at the beginning of the project we know the least about what we are going to build. Any attempt to pretend to know the requirements at that point is a gamble at best. Making major decisions at this point is a risk with expensive consequences later in the process. Therefore, in ASD, decisions on requirements and design are delayed until the last possible moment.

- Iterations are short. They range anywhere from one to four weeks. Experts recommend two- to three-week iterations. This gives the team a chance to change course or mitigate risks in due time. It is also a very effective mechanism to get feedback from the customer on a regular basis. Moreover, having a short time-boxed iteration forces the team to think about a measurable deliverable (i.e. a working system) with a clear increment over the previous deliverable.

- Anything that does not evidently provide business value is considered useless overhead. Therefore, practices like documentation and modelling are only done on demand.

Does ASD lack planning?

Not at all. If anything, ASD does planning really well. There are three levels of planning: release planning, iteration planning, and daily planning. The beauty of this approach is that you always give your team the chance to adjust based on new information. If you fail, you fail early enough to be able to pick yourself up and continue.

Aren't daily meetings a waste of time?

No. When done right, daily meetings (a.k.a. scrums or stand-up meetings) are a very efficient way to collect feedback about the previous day and plan the work for the coming day. It should not take a team member more than one minute to tell the team what they did the day before, what they are planning to do in the coming day, and what problems/obstacles they need help with.

In traditional approaches, it is extremely easy for someone to take a free ride for a long time until the team eventually realizes that this team member is not contributing anything! In ASD, daily updates prevent this from happening, or at least expose people who attempt to play such games. Having said that, it is important to point out that doing daily scrums does not make you Agile (as some managers seem to think). It is only one key part of a larger framework.

Is it true that Agile teams produce no documentation/design artifacts?

No. Almost every team will need to produce some kind of artifact that reflects the system architecture, the DB schema, the object model, etc. What Agile teams do not do, though, is produce lengthy requirement documents that no one reads. If detailed documentation is necessary for accreditation/regulation/safety purposes, there is no question that it will be done as required. Agile teams also do not produce low-level design artifacts unless they are really necessary for the reasons mentioned before. The key issue with artifacts is not actually producing them but keeping them up-to-date. An outdated requirement or design artifact might actually be more harmful than no artifact at all.

Is Agile only appropriate for small enterprises and start-ups?

While ASD is especially good for small enterprises and start-up businesses, it has also been successfully practiced in major IT corporations such as IBM, Google, and Microsoft. Having said that, it is important to note that scaling agile methods continues to be a challenge. If you decide to use agile methods in a large organization, it is strongly recommended that you seek some serious consultation to solve possible inefficiencies (e.g. large and long planning meetings, communication with outsourced teams, design sessions, etc.). Even if you work in a small-to-medium organization, you may need to consult experts on how to overcome other adoption barriers, such as the impeding culture of traditional development and the resistance of middle and upper management.

Everyone claims they're Agile. How would I know?

There are many different Agile methods and practices out there, and there are many different opinions as to what the minimum requirements are for agility. Generally speaking, you should expect Agile teams to maintain the following as a minimum:

- Heavy customer involvement (especially in release planning meetings).

- Regular update meetings (preferably daily).

- Regular releases of working software (every month or so).

- A development environment that supports continuous and automated testing and integration.

ASD in general does not support the idea of a complete separation of testing and development. In that sense, some QA roles might be eliminated in an effective Agile team. Nonetheless, the absence of the role does not mean the job itself is not accomplished. Every developer is responsible for producing automated tests that prove the correctness of their code (as in test-driven development). As a QA engineer, if you're doing something that can be done using an automation tool, then you may want to rethink your career. Still, many teams will need security testers, usability testers, performance testers, etc. In an Agile culture, these testers need to be well integrated into the team if at all possible.
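As a small illustration of what "automated tests that prove the correctness of their code" can look like, here is a minimal sketch in Python. The function `iterations_needed` and its rounding rule are hypothetical, invented purely so the tests have something to check; in test-driven development the three tests would be written first and the function filled in until they pass.

```python
import math


def iterations_needed(total_points: int, velocity: int) -> int:
    """Estimate how many iterations a backlog needs at a given velocity.

    Hypothetical helper: a partially filled iteration still counts as
    a whole iteration, so the result is rounded up.
    """
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return math.ceil(total_points / velocity)


def test_exact_fit():
    # 20 points at 10 points per iteration fits exactly in 2 iterations.
    assert iterations_needed(20, 10) == 2


def test_partial_iteration_rounds_up():
    # One leftover point still needs a third iteration.
    assert iterations_needed(21, 10) == 3


def test_zero_velocity_is_rejected():
    # A team with no velocity cannot finish; the function must refuse.
    try:
        iterations_needed(20, 0)
    except ValueError:
        return
    raise AssertionError("expected ValueError for zero velocity")
```

Functions named `test_...` with plain assertions like these can be collected and run on every build by a test runner such as pytest, which is what makes them useful in a continuous integration setup.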

Why is it that developers have to sit in an open environment, not in their own private work spaces/offices?

After decades of building software - and often failing miserably at it - we have come to learn that communication is key to the success of any software development process. Placing team members in offices or closed cubicles has been found to be an inhibitor to effective communication. Team members find it easier to use emails or documents to communicate their questions or ideas or concerns compared to traveling between offices and floors to have a face-to-face conversation.

By seating everyone in an open space, however, communication becomes significantly easier. Synchronous communication starts to occur in a very smooth and casual manner. A phenomenon known as osmotic communication starts to happen as people overhear conversations that will eventually affect their tasks in one way or another. If a build has failed, everyone on the team knows. If the customer is entertaining some thoughts about a change in the course of the project, everyone is aware of that. No secrets. No hidden agendas. And no forgotten reports, ignored emails or delayed responses.

Needless to say, if the Agile team is distributed, then communication becomes an issue that needs to be addressed.

What is the state of ASD today?

I strongly recommend that you read the State of Agile Development Survey Results (6,042 responses).

Where can I get more information?

Many books and blogs are available to help you get started. A list of the books and blogs I would recommend as a start is below. Good luck!

Books:

- Agile Software Development: Principles, Patterns and Practices by Robert C. Martin

- Refactoring: Improving the Design of Existing Code by Martin Fowler

- User Stories Applied: For Agile Software Development by Mike Cohn

- Test Driven Development: By Example by Kent Beck

- Extreme Programming Explained: Embrace Change by Kent Beck

- Agile Estimating and Planning by Mike Cohn

- Clean Code: A Handbook of Agile Software Craftsmanship by Robert C. Martin

Blogs:

- http://alistair.cockburn.us/Blog

- http://www.agilemodeling.com/essays

- http://martinfowler.com/agile.html

- http://www.mountaingoatsoftware.com/blog

- http://www.infoq.com/agile/

- http://xprogramming.com/category/articles

- Find a rich list of blogs here: http://agilescout.com/top-agile-blogs-200/

Footnote: My objective in this post is not to delve into details, but rather to give an overall understanding of what agile methods are, and answer questions I am usually asked. I would like to thank Ted Hellmann and Derar Assi for providing valuable feedback on this post.

Wednesday, May 2, 2012

Ads spamming your Facebook Timeline? Do something about it.

Are you experiencing a large volume of annoying/offensive ads on your Facebook Timeline? Have you seen any ad like this on Facebook, YouTube, Yahoo or any other website?

A label such as "ads not by Facebook" or, more generally, "ads not by this site" is a strong indication that your computer is under an adware attack. This kind of adware hijacks your browser in order to alter the content of the web pages you see by adding advertisements without your permission and without the permission of the website you are visiting. To be fair, these adware programs do actually get your permission in one way or another, but they do it in a very subtle way so that you won't even remember when and how it happened. For example, when you install an extension/add-on/plugin/toolbar for Chrome or Firefox, you are willingly giving that extension control over the contents of the pages you visit. Some extensions take advantage of this trust and start injecting their own ads to generate revenue. These ads will get very annoying as you start seeing more and more of them on your Facebook timeline or on YouTube - especially when they include content you don't approve of, such as gambling ads, adult content, and money scams. If you have kids using the computer, the problem becomes even more serious, because such ads cannot be easily moderated.

So what do you do about this?

You simply need to remove the extensions that cause these ads to display. After all, this is a breach of trust. In my humble opinion, a self-respecting extension provider should make it very clear to you (before you even install the extension) that the extension intends to generate revenue by putting ads in your browser. But if they don't make that clear, then maybe they are also doing more malicious things on your computer, such as stealing information and logging your browsing or keyboard activities. You never know.

Some advertisers will be gracious enough to let you know that their extension is the reason you are seeing the ad. In this case, all you have to do is go to the settings of your web browser and remove the extension.

(Tip for Chrome users: type this into the URL field: chrome://settings/extensions)

However, in many cases, you have no idea where the ad is coming from. Your best bet in this case is to run a trial-and-error experiment until you find the extension. That is, remove one extension at a time, restart your browser, and go to the page that displayed the malicious ads previously. If the ads persist, then the extension you just removed is not the source of the problem. If the ads are gone, then you have found the culprit and you're good! From my own experience, after a number of trials, I found that the extension that spammed my browser with all kinds of ads was "Media plugin" - version 2.0.
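The trial-and-error procedure above can be sketched as a short loop. This is only an illustration in Python: in practice each check is manual (remove the extension, restart the browser, reload the page), and the names `find_offending_extension` and `ads_still_visible` are hypothetical stand-ins for those manual steps, as are the extension names other than "Media plugin".

```python
from typing import Callable, List, Optional


def find_offending_extension(
    extensions: List[str],
    ads_still_visible: Callable[[List[str]], bool],
) -> Optional[str]:
    """Remove extensions one at a time until the ads disappear.

    `ads_still_visible` receives the extensions that are still installed
    and reports whether the unwanted ads show up. Here it is a function;
    in real life it is you looking at the page after a browser restart.
    """
    remaining = list(extensions)
    for extension in extensions:
        remaining.remove(extension)           # uninstall one extension
        if not ads_still_visible(remaining):  # restart, reload, look
            return extension                  # ads gone: culprit found
        # Ads persist: this extension was not the source, try the next.
    return None                               # no single culprit found


# Simulated check: the ads appear whenever "Media plugin" is installed.
installed = ["Ad blocker", "Media plugin", "PDF viewer"]
culprit = find_offending_extension(
    installed, lambda remaining: "Media plugin" in remaining
)
print(culprit)
```

Note that this sketch assumes a single offending extension. If several extensions inject ads, the loop reports only the one whose removal finally made the ads vanish, so it is worth re-checking the ones removed earlier before reinstalling them.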

Thursday, April 26, 2012

The Education Crisis: When universities become a waste of time!

Controversy was sparked earlier this month when the University of Florida (UF) allegedly considered a proposal to eliminate the computer science department. While UF officials maintained that this was not their plan and that they were only trying to make necessary cuts, others argued that the proposal was nothing short of an attempt to systematically close down the computer science program.

Whether UF was actually planning to close the computer science program or not is really not what I am interested in. What really interests me is the fact that educational institutions - and especially technical programs - have increasingly become a topic of discussion as far as financial cuts are concerned. And whether we like to admit it or not, this is a strong signal that the overall sentiment towards universities is not that positive. At the end of the day, this is an economic debate, and it all boils down to the economic value of universities as perceived by governments and legislators. So it is only appropriate to ask: Are universities doing a good job from an economic point of view? More specifically: Are universities producing job-ready students who are able to contribute to the economy?

(1)

It is often argued that it is not the university’s job to prepare individuals for a job, but rather to give them the necessary soft skills to do well in their future jobs. Advocates of this school of thought promote the idea that universities are mainly there to teach students how to learn, how to do research, and how to work and communicate with others. On the opposite side of this debate, some argue that universities should provide students with relevant and practical knowledge that will allow them – once they graduate – to land a job quickly and excel in it. Universities, they continue, should be able to teach students the things they will experience and have to deal with in a real job.

Universities themselves seem to be confused as to why they really exist. If they exist to give you practical knowledge, then they are definitely not doing a good job. Pick any university graduate and examine their readiness to take on a real job and you will be shocked. Ask an electrical engineering graduate who spent four or five years learning "electrical engineering" concepts to design an electric circuit for a small house and be darn sure not to come close to it - because chances are it would be a safety hazard. Unfortunately, graduates from other engineering disciplines as well as computer science and information systems are not any better.

On the other hand, if universities are supposed to teach you how to gain knowledge – or to put it in fancier terms, universities do not give you fish but they teach you how to catch fish – then, once again, that is obviously not what they are doing. You can do a simple experiment by looking at the exams students have to pass to be able to graduate with a degree in something. You will notice that passing most exams is highly correlated with the ability of the students to recall the knowledge we have provided in class – and not with their ability to research or gain new knowledge.

I argue that universities can do both, but I am not delusional enough to believe that they already do.

Something is fundamentally wrong when we keep students for four to six years in a university program, and then tell them – with no regrets – that what they will see in a real work environment is nothing like what they have learnt or practiced at university. What a waste of time! Maybe then we should not be surprised to learn that new high school graduates are willingly opting for technical institutes or community colleges. There, you spend two years learning something that is actually useful in real life, and your chances of landing a job once you are done are higher. At a technical institute, they don’t waste the first few weeks of the semester telling you about the history of organic chemistry. They don’t teach you four different methods to do something, and then tell you that none of them is actually used in today’s practices. They teach you things that matter, things that are still relevant in this day and age, things that you will actually use once you graduate and start your job.

(2)

I fully understand and appreciate the difficulty of taking a hands-on approach to education while maintaining a level of abstraction to prepare students to become thinkers and self-learners. But I also think that putting students in a bubble of abstractions and non-relevance for four years – if they make it to the fourth year at all – is the main reason students are usually not ready to take on a real job once this bubble has burst.

We need an intervention.

And we need to start off by trying to overcome the denial problem we have. As educators and administrators of educational institutes, we need to acknowledge that there is a serious problem in the system and that it had better be fixed before it is too late. We need to admit that universities are not generally able to achieve the goals we hope they would. We have to ask why – in a considerable number of institutes in Canada – it is taking six years to graduate only 1 of every 3 students who enroll full-time. Student dropout rates from technical programs are also skyrocketing at many universities. We need to submit that the problem may well not be the students themselves, but the system in which these students feel irrelevant, unfulfilled, or overwhelmed. We also need to realize that our universities may not be coping well with the ever-changing demands of the job market, and that by itself is enough reason for not taking the university route.

The other thing we need to do is study the curricula of colleges and technical institutes and learn – yes, learn – how they manage to graduate students in two years who may land a job even before they go on stage to get their degree. It is truly mind-boggling – and very embarrassing – when you hear stories of people (smart people) who actually had to do a one- or two-year program at a local institute after they had graduated from university so that they would be ready to compete for jobs in highly competitive markets!

The third step is to revisit our hiring approach. We need to handpick educators who understand the value of giving relevant knowledge, who share the vision of job-ready students, and who know how to make it happen in the classroom. We need to put more effort into making the classroom more interesting, more hands-on, and intellectually rewarding. At the same time, we need to set standards for our educators. We need to live in peace with the fact that not every academic is a good instructor. We should throw the ‘a-good-researcher-can-also-teach’ philosophy out the window, and instead open the door for creativity in the classroom and reward it. Instructors should be in the classroom because they want to be there, not because the university forces them to be there (Footnote: most universities require that all professors teach a certain load of courses per year).

(3)

A few years back, I watched a video of a professor at MIT who installed a pendulum in the classroom and actually rode the ball at the end of the string. And while the string was swinging back and forth, you could see the instructor going back and forth with it. The scene was hysterical. The students were laughing in disbelief as the instructor was swinging back and forth and his stopwatch was counting the seconds. The instructor proved to the students that the time he spent on the swing was the same time calculated by the formula written on the blackboard, and then he concluded his act with a very proud statement: “Physics works. I’m telling you.” At that point I envied those students. I so wanted to be in that classroom sharing the hype and enjoying the science. The science I can understand and relate to. The science that sticks with me forever, not the science I can only store in my brain for a few months until I have the opportunity to throw it up on an exam paper. Having watched this amazing lecture, I asked myself: if we had a similar learning environment at all universities, would we have any student in the classroom who would be yawning, or facebooking, or youtubing, or impatiently staring at their watch? Would any student leave the lecture not fully understanding physics? Would any of our students not be eager to attend a second and a third and a fourth lecture – willingly, if I may add?

We would not be exaggerating if we described the current situation of our universities as an education crisis. A crisis that is very expensive because - as cliché as it may sound - it touches the very essence of what makes civilizations flourish and advance – the young minds, the builders of the future. But I also believe that we are able to get past this stage. And we can, with enough societal and governmental momentum, steer our educational system in the right direction. A better education system means a readier workforce. And a readier workforce means less post-graduate training and less waste of time and money. A better education system is our ticket to a healthier economy and a more promising future. Maybe only then will a proposal to eliminate a computer science program sound completely outrageous and not even make it to the round table.

Friday, April 20, 2012

Is it just me or is this door stupid?

Have you ever tried to open an exit door like the one in the picture and pushed the wrong side of the handle? The bad news is that it feels very stupid when you can't open a simple door on the first attempt. The good news is that you are not alone!

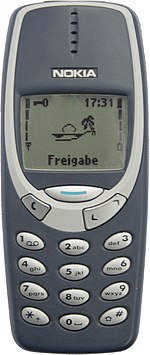

Until fairly recently, cognitive psychology had promoted the idea that humans are reactive creatures who behave according to a stimulus-response mechanism. For example, when you see a 'Press any key to continue' message, you react (or respond) to the message by actually pressing any key. It's an immediate reaction to a sudden stimulus. This notion has significantly influenced the design of things around us, computer devices and software included. To illustrate this in more depth, let's go one decade back in time. Remember the good old Nokia 3310?

Here is how you would send an SMS using a Nokia 3310 (and most other old devices):

1) You go to Messages - as a response to seeing the Messages icon on the screen.

2) You write the message - as a response to seeing the large text box and a blinking cursor.

3) Once you're done, you go to 'Options' - as a response to seeing the 'Options' button.

4) Then you hit Send - as a response to seeing the send option.

5) Finally, you key in the number of the intended recipient for the message - as a response to seeing the small rectangular box that says 'number' (or you choose a recipient from your phone book).

You can see that every step in the process is based on the assumption that if a stimulus is strong enough to trigger some behavior, then the user will respond in the correct way.

For certain things, the stimulus-response mechanism has been employed very effectively, and still is to this day. For instance, red and green colors have been consistently used to signal unsafe and safe operations respectively. Almost every new phone uses the green color to 'Answer' a call and the red to 'Reject/Decline' a call.

Nonetheless, the assumption that everything could be designed based on stimulus-response turned out to be overambitious. It is unreasonable to expect all humans to respond to a given stimulus in exactly the same way. The burden is on the developer and/or the designer to ensure that the stimulus is strong enough that most users respond in the intended way. And unless the stimuli were somewhat universal (such as red and green), that was simply too much to ask. So what is the solution?

Well, it turns out that humans are not only reactive after all. They are also intelligent, proactive beings. Cognitive psychology was turned upside down when we came to the realization that humans do not simply react to stimuli, but rather build expectations, anticipate events, and most importantly create mental models. And that is precisely why humans complain about things when they do not align well with their mental models. For example, before you open a water tap, you build a mental model of the path of the water. Once the water starts running, it should pour right into the sink. When it doesn't, you realize that something is off.

Now that is a very simple example, but it highlights a very important principle in the psychology of engineering. Once we absorb the concept of mental models, we will be able to understand why in the old days some people called call centers to ask where to find the 'any' button on their keyboard. We will understand why when many people see a big door handle, they do not know where to push. In their mental model, a door has to rotate around an axis on either side of the door. And unless this side is clearly marked (like in the picture below), it is difficult to know where you should apply the force to push. Needless to say, if you have to write instructions for opening a door, then maybe your design is simply stupid.

Image source: http://i01.i.aliimg.com/photo/v0/106222079/FIRE_EXIT_DOOR.jpg

Image source (Nokia 3310): http://upload.wikimedia.org/wikipedia/commons/thumb/3/31/Nokia_3310_blue.jpg/150px-Nokia_3310_blue.jpg

Image source (HTC): http://htcevo3dtips.com/wp-content/uploads/2011/09/HTC-EVO-3D-answer-call.jpg

Image source (iPhone): http://www.filecluster.com/reviews/wp-content/uploads/2008/11/iphone_fake_calls.jpg

Image source (Nokia): http://dailymobile.se/wp-content/uploads/2011/06/incoming_call21.jpg

Image source: http://pic.epicfail.com/wp-content/uploads/2009/07/sink-fail.jpg

Image: Don Norman & The Design of Everyday Things

At this point, you should also be able to explain why new phones handle sending messages the way they do. Using an iPhone or virtually any new mobile device (including Nokia devices), you will notice that the process of sending a message is pretty much the same except for one major thing. Can you guess what has changed?

The key difference is that with newer models steps 2 and 5 are swapped. You no longer type the message and then choose the recipient. You do it the other way around, because, according to our mental model of communication, we almost always think about the recipient before we think about the exact content of the message: "I want to text Sam to see how he is doing," "I want to tell Sara that the meeting is cancelled," "I will tell everyone about the party," etc.

In the next post of the usability series, we will expand on this concept a little bit more and look at its practical applications in building user interfaces.

Tuesday, April 17, 2012

When "functional" is not good enough: How usability can make you fly or make you die..

In the late 1990s, IBM introduced the IBM RealPhone - a software application that allowed users to make phone calls from their computers. The phone was called 'real' because.. well.. it just was. It looked real. It had a handset and a dial pad and a digital rectangular screen - everything you would expect on a 'real' phone. But did it feel real?

Image source: http://homepage.mac.com/bradster/iarchitect/phone.htm

In the older days of computing, usability had been completely overshadowed by functionality. People were impressed with what computers had to offer - so much so that they did not care if it took them twenty steps to accomplish a given task, or if they had to wait for hours to calculate a number, or if they had to punch in sixteen long queries to do a simple search. All that mattered was that the computer was able to accomplish the task. This is not to mention other important factors, such as the immaturity of input and display technologies.

Not long after this initial period of fascination, the story of computers started to take a drastic turn. The personal computer started to take its place in almost every household. We had mouse devices, keyboards, and colored displays that pretty much started a revolution in graphical user interfaces. Software builders began to think about interfaces more critically and some - like Steve Jobs - more aesthetically. Functionality was no longer the only concern. People started to take usability more seriously. And as competition heated up between different software rivals, end-users got spoiled and very picky about the software they chose for document editing, graphics design, media playing, etc. Rarely could any company get away with poorly designed software just because it was functional. New domains started to emerge, such as interaction design and usability testing. Gurus such as Jakob Nielsen outlined usability principles and design guidelines that proved to be very useful and practical for years to come.

We have to admit that we have come a long way from what I call the infant-fascination stage, when we were wowed by the mere fact that computers could do something for us - just like when infants are fascinated by the mere fact that they have discovered their hands and feet. But we also need to recognize that, in this day and age, there are even more dramatic changes taking place at a very fast pace. With the advent of touch devices like the iPad and most smart phones, digital tabletops like Microsoft Surface, and absolutely mind-blowing input technologies such as Microsoft Kinect, we find ourselves puzzled by how the usability status quo could possibly cope with these changes. Design principles that applied to mouse-based user interfaces cannot be applied as is to touch-based interfaces. A fingertip is probably thousands of times bigger than a mouse cursor or a stylus head. Windows, menus, and scroll bars are only a few examples of all the things we need to revisit in order to build truly usable interfaces for orientation-agnostic devices such as digital tabletops.

The challenge of usability has always been there, and it is there to stay. Especially nowadays, it seems to be where the competitive advantage is. If you build aesthetically pleasing devices (if you're building hardware at all), with intuitive user interactions and a simple interface, then your chances of grabbing the lion's share of the market in any given domain are undoubtedly higher. Apple's iPod is a prime example of that. Google's search engine is another example. Neither of these examples was the first to market in its domain. If anything, Google actually came very late to the market of search engines. The iPhone was not even the second or the third to market in the domain of smart phones, but Apple still managed to sell a grand total of 100 million devices in a few years! I would actually go further to state that usability has now become a more important factor than functionality. Just think about the specs of Apple's iPod compared to those of Microsoft's Zune. The latter was superior in many ways - but definitely not in aesthetics and usability. After generations of improved Zune devices, Microsoft could not get more than roughly 3% of the market compared to about 70% for Apple (50% of which were new customers - just to avoid going into the 'loyalty' argument). In 2011, Microsoft was finally courageous enough to put an end to the troubled journey of its Zune and pull the plug on the whole idea of competing with the iPod.

Now that we have established that usability does provide business value (if you consider selling 100 million devices business value, of course), we can talk about what you can do as a software practitioner or as a software company to not only build usable systems, but also promote a culture of looking at usability as a competitive advantage and even as a business niche.

Stay tuned for more posts on the "usability series"!

Tuesday, April 10, 2012

What makes a good interview question

Different interviewers have different styles of asking questions. Especially when it comes to technical interviews, the interviewer usually has more freedom to decide what qualifies as a good question. In this post, I would like to talk a little bit about what I think makes a good question.

In the wide spectrum of interview questions, we can distinguish three different areas that interviewers like to test: fundamentals, technology-specific issues, and problem-solving skills. Depending on the interviewer's style, you may see more or less focus on each of the three areas. Let's take programming as an example. Questions about fundamentals (i.e. theory) involve topics like object-oriented programming, recursion, data structures, etc. Technology-specific questions, on the other hand, tackle things like garbage collection in Java, delegates in C#, pointers in C++, update and draw in the XNA Framework, etc. Lastly, to test problem-solving skills, you see questions that combine the application of fundamentals in solving a relatively abstract problem, such as finding all the possible moves on a chessboard.

So, the question is how much focus should we give each of the three areas to determine if the candidate is a good fit for the job (technically speaking)?

Fundamentals

Fundamental questions are important because they tell you something about the background of the person at the other end of the table. If the person cannot tell you what a class is, or what we use inheritance for, then maybe they are interviewing for the wrong job. Having said that, I believe that we generally overdo it when it comes to this part of the interview. I honestly don't see the value of asking detailed questions like how the OS handles memory allocation, what the difference is between inner join and outer join, and the like. I know for a fact that many interviewers love to delve deep into such details, but I think it is an utter waste of time, and even a risky approach. If an interviewee knows all the theory behind programming and databases, that does not tell you anything about whether they are a good programmer or not. It merely reflects their ability to recall information they read in textbooks. And using this approach you may run the risk of losing excellent candidates who do not necessarily have this information readily available in the back of their mind. I have seen many individuals who understand the theoretical concepts very well, but they simply cannot put labels on them. My 1-year-old boy just started to understand the physics of gravity. He knows that if he pushes my laptop off my desk, it will land on the floor (maybe in more than one piece, but that's another law to learn). But does my son know that this is called gravity? Even long before Newton, people knew about gravity, and they used it in practical applications. But it was Newton who put a label on it and studied it in more detail. And it is really difficult to claim that no one before Newton understood physics because they didn't know the term 'gravity'.

To conclude this part, my recommendation is the following. Use some general fundamental questions as a quick filtering mechanism but don't go deep into any details.

Technology-specific questions

Avoid those at all costs. Unless you need to hire someone with very specific experience in a certain technology, you want to try to stay away from language-specific or tool-specific questions. For example, if you need someone to maintain a SharePoint server, then they had better know something about SharePoint. But if the scope of the job is not that specific, then what you should really be looking for is the candidate's ability to learn on their own.

But how do you do that? Simple. Ask the candidate to accomplish a specific task using a technology they have never used before. Provide them with all the things that would be available in a real-life scenario (e.g. API documentation, access to the internet, etc.). And see how much they can accomplish in a given time frame. You will be surprised by the vastly different levels of learning abilities of your candidates. This kind of exercise is also an excellent way to test their research skills, as well as their stress and time management skills.

In conclusion, what you want to avoid is rejecting a good candidate because they have been using C++ and C# instead of Java for the past five years, or because they are used to an IDE to build and run their projects instead of a Linux command line.

Problem-solving questions

This should be the main focus of your interview. However, the objective of a problem-solving question should not be to test whether the candidate can reach the correct answer, simply because a candidate who has heard or read about the question before would have a significantly higher chance of getting to the right answer than someone who has not. Also, how you phrase the question might lead different candidates in different directions. What you really want to look for here is whether the candidate is capable of analyzing the problem and communicating their thought process. You want to see if they are able to apply the fundamentals (e.g. recursion, arrays, etc.) to solve the problem at hand. Ask them follow-up questions as to how they could improve a given solution, or whether there is a better way of thinking about the problem. Stimulate their brains, and don't hesitate to give hints or add more obstacles depending on how well the candidate is doing.
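To make this concrete, here is a minimal sketch of what a first answer to the chessboard question might look like. The post does not say which piece or board is meant, so the knight, the empty 8x8 board, and the function name `knight_moves` are all assumptions made purely for illustration.

```python
from typing import List

# The eight (file, rank) jumps a knight can make from any square.
KNIGHT_OFFSETS = [
    (1, 2), (2, 1), (2, -1), (1, -2),
    (-1, -2), (-2, -1), (-2, 1), (-1, 2),
]


def knight_moves(square: str) -> List[str]:
    """Return the squares a knight on `square` (e.g. "d4") can reach
    in one move on an otherwise empty 8x8 board, sorted by name."""
    file_index = ord(square[0]) - ord("a")  # "a".."h" -> 0..7
    rank_index = int(square[1]) - 1         # "1".."8" -> 0..7
    moves = []
    for file_step, rank_step in KNIGHT_OFFSETS:
        new_file = file_index + file_step
        new_rank = rank_index + rank_step
        # Keep only the jumps that stay on the board.
        if 0 <= new_file < 8 and 0 <= new_rank < 8:
            moves.append(chr(ord("a") + new_file) + str(new_rank + 1))
    return sorted(moves)


if __name__ == "__main__":
    print(knight_moves("a1"))  # a corner square allows only two jumps
```

The interesting part of the interview starts after an answer like this: what changes if other pieces occupy the board, or if you want every square reachable in three moves? Questions of that kind show whether the candidate can build on their own solution.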

To summarize, this part of the interview should give you and the candidate a chance to communicate at an intellectual level. The onus is on the candidate to demonstrate that they are capable of using a number of leads to head in the right direction of thinking about the problem at hand.

Finally, as an interviewer with hiring authority, you will find it very tempting to show off that you know more than the person in front of you. Interviewers usually like to have fun in interviews by asking all kinds of ridiculous questions and maybe discussing controversial topics. There is nothing wrong with that as long as we draw a clear line between having fun and getting the job of hiring the best candidate done.

In the wide spectrum of interview questions, we can distinguish three different areas that interviewers like to test: fundamentals, technology-specific issues, and problem-solving skills. Depending on the interviewer's style, you may see more or less focus on all three areas. Let's take programming as an example. Questions about fundamentals (i.e. theory) involve topics like object-oriented programming, recursion, data structures... etc. Technology-specific questions on the other hand tackle things like garbage collection in Java, delegates in C#, pointers in C++, update and draw in the XNA Framework .. etc. Lastly, to test problem-solving skills, you see questions that combine the application of fundamentals in solving a relatively abstract problem such as finding all the possible moves in a chessboard.

So, the question is how much focus should we give each of the three areas to determine if the candidate is a good fit for the job (technically speaking)?

Fundamentals

Fundamental questions are important because they tell you something about the background of the person at the other end of the table. If the person cannot tell you what a class is, or what we use inheritance for, then maybe they are interviewing for the wrong job. Having said that, I believe that we generally overdo it when it comes to this part of the interview. I honestly don't see the value of asking detailed questions like how the OS handles memory allocation and what the difference is between inner join and outer join and the like.. I know for a fact that many interviewers love to delve deep into such details but I think it is an utter waste of time, and even a risky approach. If an interviewee knows all the theory behind programming and databases, that does not tell you anything about whether they are a good programmer or not. It merely reflects their ability to recall information they read in textbooks. And using this approach you may run the risk of losing excellent candidates who do not necessarily have this information readily available in the back of their mind. I have seen many individuals who understand the theoretical concepts very well, but they simply cannot put labels on them. My 1-year-old boy just started to understand the physics of gravity. He knows that if he pushes my laptop off my desk, it will land on the floor (maybe in more than one piece.. but that's another law to learn). But does my son know that this is called gravity? Even long before Newton, people knew about gravity, and they used it in practical applications. But it was Newton who put a label on it and studied it in more detail. And it is really difficult to claim that no one before Newton understood physics because they didn't know the term 'gravity'.

To conclude this part, my recommendation is the following. Use some general fundamental questions as a quick filtering mechanism but don't go deep into any details.

Technology-specific questions

Avoid those at any cost. Unless you need to hire someone with a very specific experience in a certain technology, you want to try to stay away from language-specific or tool-specific questions. For example, if you need someone to maintain a SharePoint server, then they better know something about SharePoint. But if the scope of the job is not that specific, then what you should really be looking for is the ability for the candidate to learn on their own.

But how do you do that? Simple. Ask the candidate to accomplish a specific task using a technology they have never used before. Provide them with all the things that would be available in a real life scenario (e.g. API documentation, access to the internet .. etc). And see how much they can accomplish in a given time frame. You will be surprised by the vastly different levels of learning abilities of your candidates. This kind of exercise is also an excellent way to test their research skills, as well as their stress and time management skills.

In conclusion, what you want to avoid is rejecting a good candidate because they have been using C++ and C# instead of Java for the past five years, or because they are used to an IDE to build and run their projects instead of a Linux command line.

Problem-solving questions

This should be the main focus of your interview. However, the objective of a problem-solving question should not be to test whether the candidate can reach the correct answer. A candidate who has heard or read about the question before has a significantly higher chance of getting to the right answer than someone who has not. Also, how you phrase the question might lead different candidates in different directions. What you really want to look for here is whether the candidate is capable of analyzing the problem and communicating their thought process. You want to see if they are able to apply the fundamentals (e.g. recursion, arrays, etc.) to solve the problem at hand. Ask them follow-up questions as to how they could improve a given solution, or whether there is a better way of thinking about the problem. Stimulate their brains, and don't hesitate to give hints or add more obstacles depending on how well the candidate is doing.

To summarize, this part of the interview should give you and the candidate a chance to communicate at an intellectual level. The onus is on the candidate to demonstrate that they are capable of using a number of leads to head in the right direction when thinking about the problem at hand.

Finally, as an interviewer with hiring authority, you will find it very tempting to show off that you know more than the person in front of you. Interviewers usually like to have fun in interviews by asking all kinds of ridiculous questions and maybe discussing controversial topics. There is nothing wrong with that as long as we draw a clear line between having fun and getting the job of hiring the best candidate done.

Thursday, April 5, 2012

Technical interview questions for fresh graduates

Many of my colleagues and my former students have landed jobs in industry in the previous year. 2011 by all standards has been a good year for IT graduates. At least here in Alberta. Interview questions vary widely depending on the company, team, department, job title, interviewer and many other factors. However, based on my recent research and conversations with new hires as well as interviewers, I noticed a stable pattern in recent interviews. Interviewers do not seem to get more creative with their questions, maybe because they don't see the need to. Anyway, I will try to summarize some of the points you may want to know before you go for an interview these days.

There are three different types of what you would label as "technical questions". First, you have the straightforward, purely technical type of questions. For example:

- What is multiple inheritance?

- Describe Polymorphism.

- What are partial classes in C#?

- What are mock objects?

- What are delegates in C#?

- How do you implement multi-threaded applications in Java?

Of course, depending on your answer, you may expect follow-up questions like: What can go wrong with multiple inheritance? How can you get around it in Java?

The second type of question is supposed to test your problem-solving skills. At the end of the day, you might be able to answer all the theory questions above (all 'A' students should), but you might not necessarily know how to actually use these concepts in problem solving (not all 'A' students can). Here are a few examples from very recent interviews:

Q1. You have a singly-linked list. You do not have access to its head, but you do have a pointer to one of its items. How would you go about removing that item from the list?

- The trick here is to understand what 'exactly' is needed. Many interviewees will quickly jump to thinking about how to delete the node that contains the item. Of course, you cannot do that without a pointer to the previous node (or at least the head), because you would break the linkage in the list. So what do you do? Shift the items one by one to overwrite the item you want to delete, and then remove the node at the end of the list. Note that this works only if the item is not in the last node, since the last node has nothing after it to shift.
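The shifting idea from Q1 can be sketched in Java roughly as follows. This is only an illustration: the ListNode and NodeRemoval classes and the removeItem method are names made up for this example, and the method simply reports failure when it is handed the last node.

```java
// Hypothetical minimal node type for a singly-linked list of ints.
class ListNode {
    int value;
    ListNode next;

    ListNode(int value, ListNode next) {
        this.value = value;
        this.next = next;
    }
}

class NodeRemoval {
    // Removes the item stored in `node` without access to the head:
    // every later value is shifted one node forward, then the last node is unlinked.
    // Returns false if `node` is null or is the last node, where shifting is impossible.
    static boolean removeItem(ListNode node) {
        if (node == null || node.next == null) {
            return false;
        }
        ListNode current = node;
        // Shift values forward until `current` is the second-to-last node.
        while (current.next.next != null) {
            current.value = current.next.value;
            current = current.next;
        }
        // Copy the last value and drop the last node.
        current.value = current.next.value;
        current.next = null;
        return true;
    }

    public static void main(String[] args) {
        ListNode head = new ListNode(1, new ListNode(2, new ListNode(3, new ListNode(4, null))));
        removeItem(head.next); // remove the item 2
        for (ListNode n = head; n != null; n = n.next) {
            System.out.print(n.value + " ");
        }
        System.out.println();
    }
}
```

A follow-up the interviewer may ask: since only the next value is really needed, you can also copy just the next node's value into the current node and unlink the next node, which takes constant time instead of a full walk to the end.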

Q2. How would you model a Chicken in Java?

- Surprisingly enough, this question does not seem to get old. I used this example in one of my software engineering lectures. The next lecture, one of my students told me that she got the same exact question in her interview on that very day! She was very lucky.

There is really no one correct answer to this question. All you need to do is ask questions to understand what qualifies as attributes of the Chicken class (e.g. weight, age, body parts, etc.) and what kind of behavior is expected (e.g. member functions like layEgg(), cluck(), getSlaughteredAndGrilledOnMyNewBBQ()). Sounds cruel, I know. But be careful here not to do too much in one function. Maybe you should define one function to slaughter and another to grill.
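As one possible answer among many, here is a minimal sketch of such a class. The attribute names, units and the Egg type are assumptions made for this example; in a real interview they should come out of the questions you ask the interviewer.

```java
// Hypothetical placeholder for whatever an egg needs to be in the interviewer's domain.
class Egg { }

class Chicken {
    private final double weightKg;   // assumed unit
    private final int ageInWeeks;    // assumed unit
    private boolean alive = true;
    private boolean grilled = false;

    Chicken(double weightKg, int ageInWeeks) {
        this.weightKg = weightKg;
        this.ageInWeeks = ageInWeeks;
    }

    double getWeightKg() { return weightKg; }
    int getAgeInWeeks() { return ageInWeeks; }
    boolean isAlive() { return alive; }
    boolean isGrilled() { return grilled; }

    String cluck() {
        return "Cluck!";
    }

    Egg layEgg() {
        if (!alive) {
            throw new IllegalStateException("A slaughtered chicken cannot lay eggs");
        }
        return new Egg();
    }

    // Slaughtering and grilling are kept as two separate steps, one job per method.
    void slaughter() {
        alive = false;
    }

    void grill() {
        if (alive) {
            throw new IllegalStateException("Slaughter the chicken before grilling it");
        }
        grilled = true;
    }
}
```

What matters is less the final class than the conversation that leads to it: which attributes matter, which behavior is in scope, and why each method does only one thing.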

Q3. In an unsorted array of integers, we have the numbers from 1 to 100 stored except for one number. How do you find that number?

- If the array were sorted, a simple traversal that checks for arr[i+1] - arr[i] != 1 would find the gap.

- If the array is not sorted, then you can add up all the elements and use the mathematical formula to compute the missing element (the sum of the numbers from 1 to N is N * (N+1) / 2).

- You can also use a hash set (e.g. a HashSet in Java) to find the missing element unless the question specifies that you can't.
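The sum and hash-based approaches to Q3 might look like this in Java. The class and method names are made up for this sketch, and both methods assume the input really contains every number from 1 to n except one.

```java
import java.util.HashSet;
import java.util.Set;

class MissingNumber {
    // Sum approach: the expected sum of 1..n minus the actual sum is the missing number.
    static int findBySum(int[] values, int n) {
        int expected = n * (n + 1) / 2;
        int actual = 0;
        for (int v : values) {
            actual += v;
        }
        return expected - actual;
    }

    // Hash approach: remember what was seen, then look for the first absent number.
    static int findBySet(int[] values, int n) {
        Set<Integer> seen = new HashSet<>();
        for (int v : values) {
            seen.add(v);
        }
        for (int candidate = 1; candidate <= n; candidate++) {
            if (!seen.contains(candidate)) {
                return candidate;
            }
        }
        return -1; // nothing is missing
    }

    public static void main(String[] args) {
        // Build 1..100 without 42.
        int[] values = new int[99];
        int index = 0;
        for (int i = 1; i <= 100; i++) {
            if (i != 42) {
                values[index++] = i;
            }
        }
        System.out.println(findBySum(values, 100)); // prints 42
        System.out.println(findBySet(values, 100)); // prints 42
    }
}
```

The sum version needs no extra storage, while the set version uses O(n) extra memory but extends more naturally to the case where several numbers are missing.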

Q4. Without using a loop, how do you check if all the characters composing a string are the same? For example "11111" or "bbb"? You can use any built-in string method.

- Substring the given string from the first character until the one before the last. Then substring starting from the second character until the last. Check if the two substrings match.

s.substring(0,s.length()-1).equals(s.substring(1,s.length()))

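- Take the first char. Use the replace method to remove every occurrence of it and make sure the resulting string is empty. Prefer replace over replaceAll here, because replaceAll treats its first argument as a regular expression and misbehaves for characters such as '.' or '*'.

s.replace(String.valueOf(s.charAt(0)), "").isEmpty()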

- You can always use recursion if the question did not specify that you can't.

- If the language provides support for simple conversion, convert the string into an array, and the array into a set. Check that the produced set has only one element.
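Put together, the four ideas for Q4 could be sketched in Java as below. The class and method names are made up for this example, every method assumes a non-empty string, and the last one uses chars().distinct() as a stand-in for the "convert to a set" idea.

```java
class SameChars {
    // Compare the string without its last char to the string without its first char.
    static boolean bySubstring(String s) {
        return s.substring(0, s.length() - 1).equals(s.substring(1));
    }

    // Remove every occurrence of the first char; nothing should be left.
    static boolean byReplace(String s) {
        return s.replace(String.valueOf(s.charAt(0)), "").isEmpty();
    }

    // Recursion: the first two chars must match, and so must the rest.
    static boolean byRecursion(String s) {
        return s.length() < 2
                || (s.charAt(0) == s.charAt(1) && byRecursion(s.substring(1)));
    }

    // Set-like approach: exactly one distinct character.
    static boolean byDistinct(String s) {
        return s.chars().distinct().count() == 1;
    }

    public static void main(String[] args) {
        for (String s : new String[] {"11111", "bbb", "abb", "..a"}) {
            System.out.println(s + ": " + bySubstring(s) + " " + byReplace(s)
                    + " " + byRecursion(s) + " " + byDistinct(s));
        }
    }
}
```

Whether library calls that loop internally count as "not using a loop" is a good thing to clarify with the interviewer before answering.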

Q5. How do you efficiently remove duplicate elements from an array/list?

- You need to avoid using any algorithm that is O(n^2) or worse.

- Put them in a set and put them back in the array/list.

- The question gets very interesting when there are more constraints, like not allowing extra storage, maintaining the order of the elements, not allowing sets, etc.
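Two of the variants from Q5 can be sketched in Java as follows. The class and method names are made up for this example. The first method uses a LinkedHashSet so that the original order survives; the second is one possible answer to the "no sets" constraint, at the price of sorting the caller's array and losing the original order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;

class Dedup {
    // Set-based: expected O(n) time, O(n) extra space, first occurrences keep their order.
    static <T> List<T> removeDuplicates(List<T> items) {
        return new ArrayList<>(new LinkedHashSet<>(items));
    }

    // Without a set: sort (O(n log n)), then compact in place.
    // Reorders `values`; the unique elements end up in values[0..result-1].
    static int dedupSortedInPlace(int[] values) {
        if (values.length == 0) {
            return 0;
        }
        Arrays.sort(values);
        int write = 1;
        for (int read = 1; read < values.length; read++) {
            if (values[read] != values[write - 1]) {
                values[write++] = values[read];
            }
        }
        return write;
    }

    public static void main(String[] args) {
        System.out.println(removeDuplicates(Arrays.asList(3, 1, 3, 2, 1))); // prints [3, 1, 2]

        int[] values = {3, 1, 3, 2, 1};
        int uniqueCount = dedupSortedInPlace(values);
        System.out.println(Arrays.toString(Arrays.copyOf(values, uniqueCount))); // prints [1, 2, 3]
    }
}
```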

Good luck!

Tuesday, April 3, 2012

Non-nullable value type error

The Problem

Working with XNA to develop a game for Windows Phone 7, I came across this issue of non-nullable values. What I was trying to do was simple. Given the coordinates of a touch position x and y, give me the color of the object at that position if any. Otherwise, return null.

public Color getObjectColorAtPosition(float x, float y)
{
    // gameObjects, Bounds and Color are placeholder names for the game's own data
    foreach (var obj in gameObjects)
    {
        if (obj.Bounds.Contains((int)x, (int)y))
            return obj.Color;
    }

    // since we did not find any match to the given x and y
    return null;
}

But the compiler didn't like it, and I got the following error:

"Cannot convert null to 'Microsoft.Xna.Framework.Color' because it is a non-nullable value type"

The Solution

The fix is to make the return type nullable:

public Color? getObjectColorAtPosition(float x, float y)
{
    // gameObjects, Bounds and Color are placeholder names for the game's own data
    foreach (var obj in gameObjects)
    {
        if (obj.Bounds.Contains((int)x, (int)y))
            return obj.Color;
    }

    // since we did not find any match to the given x and y
    return null;
}

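Nothing changed except the question mark next to the return type of the function. In a similar way you can declare a nullable Vector2 as:

Vector2? vector = null;

But then if you want to read the content of the Vector2? object, you have to go through its Value property, and check HasValue first, because reading Value from a null instance throws an InvalidOperationException:

if (vector.HasValue)
{
    float x = vector.Value.X;
    float y = vector.Value.Y;
}

Value returns a copy of the struct, so the compiler rejects an assignment such as vector.Value.X = 4. To change the coordinates, assign a whole new value instead:

vector = new Vector2(4, 2);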

Essentially what you are doing here is wrapping the non-nullable type within a nullable one. The longer method of doing this is by creating a Nullable instance:

Nullable<Vector2> vector = null;

Why is this happening in the first place?

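Well, ask Microsoft. But here is a quick glimpse. In C#, Color and Vector2 are structs, that is, value types: a variable of such a type holds the data itself rather than a reference to it, so there is no reference that could be null. Beyond that, some language developers think it is a better practice to not allow null as a sentinel value for struct objects or even class instances. This way, the object can be used anywhere in your program without having to verify that it is not null.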

Others, including me, think that this is not something the language has to concern itself with. Leave it up to the developer to decide how to deal with null values. Or at least make it the other way around so that the developer has to specify (with a question mark) if an object should be non-nullable.

A funny comment I read on this topic: "what's next? non-zero integral types to avoid divide by zero?"
